Path to Decentralization: View Change for Liveness and Security

Exploring the Significance and Implementation of View Change in Harmony's Consensus Protocol

Overview

Consensus protocols are the procedures by which participants in a blockchain network reach a common agreement about the state of the network. Because a blockchain is a distributed system, there may be times when the consensus mechanism does not operate as intended due to faulty participants.

As a network becomes more decentralized and externalized, these unexpected problems become more prevalent. To prevent the network from halting, a mechanism called view change is necessary. In this article, we will explore Harmony’s implementation of the view change mechanism.

Background

Harmony employs Fast Byzantine Fault Tolerance (FBFT), an enhanced version of Practical Byzantine Fault Tolerance (PBFT), as its consensus protocol. The protocol consists of a leader who proposes a block with a batch of transactions and validators who, as the name implies, validate the legitimacy of the block. The protocol is composed of two modes: normal and view change.

In normal mode, a block is produced, validated, and committed by the participants through a series of steps (for a detailed explanation of the process, refer to Harmony’s Technical Whitepaper). The other mode is view change mode. Validators trigger it if a block is not received within a designated timeframe or if the received block is invalid. Whatever the underlying cause, view change is initiated whenever the next block cannot be committed, which prevents consensus from stalling. In other words, the view change mechanism guarantees the “liveness” of the network.

In the context of blockchain, liveness is a fundamental property that ensures the network keeps operating and making progress. It requires that the blockchain continues to grow without getting stuck or becoming stagnant. In practice, new blocks must be continuously produced and committed to the chain, so that the chain’s height increases and the network reaches consensus on the latest state of the ledger. View change is the mechanism that preserves this property when a leader fails to deliver a valid block in time.

Harmony’s Implementation

Every time a new view starts, whether after a block has been committed or after a view change, a timer for the corresponding mode is started. The timers are stored in the consensusTimeout field of the Consensus struct.

```go
type Consensus struct {
	// ...

	// 2 types of timeouts: normal and viewchange
	consensusTimeout map[TimeoutType]*utils.Timeout

	// ...
}
```

Validators: Starting a View Change

In consensus_v2.go, the Start() method of the Consensus struct runs the consensus main loop and waits for the next block. If the current step does not complete before its timer expires (Harmony’s view timeout is set to 27 seconds), startViewChange is called, as shown below. Both the consensus timer and the view change timer lead to startViewChange; only the log message differs.

```go
if k != timeoutViewChange {
	consensus.getLogger().Warn().Msg("[ConsensusMainLoop] Ops Consensus Timeout!!!")
	consensus.startViewChange()
	break
} else {
	consensus.getLogger().Warn().Msg("[ConsensusMainLoop] Ops View Change Timeout!!!")
	consensus.startViewChange()
	break
}
```

The startViewChange method proceeds as follows:

```go
// Stop the timers that are not relevant to view change.
consensus.consensusTimeout[timeoutConsensus].Stop()
consensus.consensusTimeout[timeoutBootstrap].Stop()
consensus.current.SetMode(ViewChanging)
nextViewID, duration := consensus.getNextViewID()
consensus.setViewChangingID(nextViewID)
// ...
consensus.LeaderPubKey = consensus.getNextLeaderKey(nextViewID)
// ...
consensus.consensusTimeout[timeoutViewChange].SetDuration(duration)
// Runs only when startViewChange returns, after the remaining setup is done.
defer consensus.consensusTimeout[timeoutViewChange].Start()
```

All timers not relevant to view change are stopped. Then the required values, such as the mode, the next view ID, and the next leader, are configured. The duration of the view change timer is set as well, but starting the timer is deferred until the end of the method (in Go, the defer statement postpones a function call until the surrounding function returns). This ensures that the view change timer starts only after everything has been prepared.
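A small, hypothetical model shows how defer affects the ordering. It records each step as a string instead of touching real timers. The step names paraphrase the excerpt above together with the payload and messaging steps described next. The viewChanger type is an illustration, not Harmony code.

```go
package main

import "fmt"

// viewChanger is a hypothetical stand-in that records the order of the steps
// performed by startViewChange, so the effect of defer can be observed.
type viewChanger struct {
	trace []string
}

func (v *viewChanger) step(name string) { v.trace = append(v.trace, name) }

func (v *viewChanger) startViewChange() {
	v.step("stop consensus timer")
	v.step("stop bootstrap timer")
	v.step("set mode ViewChanging")
	v.step("set next view ID")
	v.step("set next leader")
	v.step("set view change duration")
	// Registered here, but executed only when startViewChange returns.
	defer v.step("start view change timer")

	v.step("init payload")
	v.step("send view change messages")
}

func main() {
	v := &viewChanger{}
	v.startViewChange()
	for i, s := range v.trace {
		fmt.Println(i, s)
	}
}
```

The timer start is the last entry in the trace, even though it is written before the payload and messaging steps.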

```go
members := consensus.Decider.Participants()
consensus.vc.AddViewIDKeyIfNotExist(nextViewID, members)

// init my own payload
if err := consensus.vc.InitPayload(
	consensus.FBFTLog,
	nextViewID,
	consensus.getBlockNum(),
	consensus.priKey,
	members); err != nil {
	consensus.getLogger().Error().Err(err).Msg("[startViewChange] Init Payload Error")
}
```

Next, the participants (the members of the current committee) are registered under the next view ID in the node’s view change state (consensus.vc). With the configuration for the next view and the member list in place, the node initializes its own view change payload.

```go
// for view change, send separate view change per public key
// do not do multi-sign of view change message
for _, key := range consensus.priKey {
	if !consensus.isValidatorInCommittee(key.Pub.Bytes) {
		continue
	}
	msgToSend := consensus.constructViewChangeMessage(&key)
	if err := consensus.msgSender.SendWithRetry(
		consensus.getBlockNum(),
		msg_pb.MessageType_VIEWCHANGE,
		[]nodeconfig.GroupID{
			nodeconfig.NewGroupIDByShardID(nodeconfig.ShardID(consensus.ShardID))},
		p2p.ConstructMessage(msgToSend),
	); err != nil {
		consensus.getLogger().Err(err).
			Msg("[startViewChange] could not send out the ViewChange message")
	}
}
```

Then, for each key it hosts that belongs to the current committee, the node constructs a separate view change message and sends it, with retries, to its shard’s group. When startViewChange returns, the deferred call starts the view change timer. At this point, the initiation of the view change process is complete.
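The following is a hypothetical reduction of this loop. It produces one message per hosted key, includes committee members only, and never aggregates messages into a multi-signed one. The blsKey and viewChangeMsg types and the buildViewChangeMessages function are assumptions for illustration. Signing and sending are omitted.

```go
package main

import "fmt"

// blsKey is a hypothetical stand-in for one BLS key pair hosted by a node.
type blsKey struct{ Pub string }

// viewChangeMsg is a simplified view change message for exactly one key.
type viewChangeMsg struct {
	ViewID uint64
	Sender string
}

// buildViewChangeMessages returns one message per hosted key, skipping keys
// that are not in the current committee. Keys are never combined into a
// single multi-signed message.
func buildViewChangeMessages(keys []blsKey, committee map[string]bool, viewID uint64) []viewChangeMsg {
	var out []viewChangeMsg
	for _, k := range keys {
		if !committee[k.Pub] {
			continue
		}
		out = append(out, viewChangeMsg{ViewID: viewID, Sender: k.Pub})
	}
	return out
}

func main() {
	keys := []blsKey{{"key-a"}, {"key-b"}, {"key-c"}}
	committee := map[string]bool{"key-a": true, "key-c": true}
	for _, m := range buildViewChangeMessages(keys, committee, 7) {
		fmt.Printf("VIEWCHANGE view=%d sender=%s\n", m.ViewID, m.Sender)
	}
}
```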

Note that once the view change timer has started, the NewView message from the new leader must be received within 27 seconds. If the new leader fails to send it in time or sends an invalid message, another view change is started following the same logic, this time with an incremented view ID.
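A simple round-robin rule shows how repeated timeouts can move leadership forward. This rule is an assumption for illustration. The sketch picks the candidate that sits (viewID minus the view ID of the last committed block) positions after the current leader, which may differ from Harmony’s actual getNextLeaderKey. The nextLeader function is hypothetical.

```go
package main

import "fmt"

// nextLeader is a hypothetical round-robin rule: the leader for viewID is the
// candidate (viewID - blockViewID) positions after the current leader, where
// blockViewID is the view ID of the last committed block. Each failed view
// change raises viewID by one, so leadership moves one slot further.
// candidates must be non-empty; an unknown current leader is treated as index 0.
func nextLeader(candidates []string, current string, blockViewID, viewID uint64) string {
	idx := 0
	for i, c := range candidates {
		if c == current {
			idx = i
			break
		}
	}
	gap := int((viewID - blockViewID) % uint64(len(candidates)))
	return candidates[(idx+gap)%len(candidates)]
}

func main() {
	candidates := []string{"leader-0", "leader-1", "leader-2"}
	blockViewID := uint64(100)
	for viewID := blockViewID + 1; viewID <= blockViewID+3; viewID++ {
		fmt.Printf("view %d -> %s\n", viewID, nextLeader(candidates, "leader-0", blockViewID, viewID))
	}
}
```

Under this rule, a second consecutive failure skips the candidate that failed to produce a NewView message in the first round.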

New Leader: Starting a New View

When a view change message arrives, onViewChange first runs a series of sanity checks. These checks ensure that only the valid next leader executes this logic, prevent the logic from running again once a quorum has been reached, and verify that the received message is valid. Once all checks pass, the following logic is executed:

```go
// already checked the length of SenderPubkeys in onViewChangeSanityCheck
senderKey := recvMsg.SenderPubkeys[0]

// update the dictionary key if the viewID is first time received
members := consensus.Decider.Participants()
consensus.vc.AddViewIDKeyIfNotExist(recvMsg.ViewID, members)

// do it once only per viewID/Leader
if err := consensus.vc.InitPayload(consensus.FBFTLog,
	recvMsg.ViewID,
	recvMsg.BlockNum,
	consensus.priKey,
	members); err != nil {
	consensus.getLogger().Error().Err(err).Msg("[onViewChange] Init Payload Error")
	return
}

err = consensus.vc.ProcessViewChangeMsg(consensus.FBFTLog, consensus.Decider, recvMsg)
if err != nil {
	consensus.getLogger().Error().Err(err).
		Uint64("viewID", recvMsg.ViewID).
		Uint64("blockNum", recvMsg.BlockNum).
		Str("msgSender", senderKey.Bytes.Hex()).
		Msg("[onViewChange] process View Change message error")
	return
}
```

If this is the first message received for this view ID, the new leader registers the view ID and the committee members in its view change state. It then initializes its own payload, which happens only once per view ID. Finally, the received view change message is processed. If processing fails, the error is logged and the message is dropped.
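The bookkeeping behind AddViewIDKeyIfNotExist and message processing can be modeled as one bitmap per view ID. The vcTracker type and its Process and Count methods are hypothetical simplifications. The real view change state also tracks signatures and payloads, which are omitted here.

```go
package main

import (
	"errors"
	"fmt"
)

// viewVotes records which committee members sent a view change for one view ID.
type viewVotes struct {
	members []string
	signed  []bool
}

// vcTracker is a hypothetical, simplified model of per-view bookkeeping.
type vcTracker struct {
	views map[uint64]*viewVotes
}

func newTracker() *vcTracker { return &vcTracker{views: map[uint64]*viewVotes{}} }

// AddViewIDKeyIfNotExist creates the entry for viewID only the first time it
// is seen; later calls for the same view ID leave recorded votes untouched.
func (t *vcTracker) AddViewIDKeyIfNotExist(viewID uint64, members []string) {
	if _, ok := t.views[viewID]; ok {
		return
	}
	t.views[viewID] = &viewVotes{members: members, signed: make([]bool, len(members))}
}

// Process marks the sender's slot for viewID. A repeated message from the
// same sender sets the same slot again, so it is counted only once.
func (t *vcTracker) Process(viewID uint64, sender string) error {
	v, ok := t.views[viewID]
	if !ok {
		return errors.New("unknown view ID")
	}
	for i, m := range v.members {
		if m == sender {
			v.signed[i] = true
			return nil
		}
	}
	return fmt.Errorf("sender %s is not in the committee", sender)
}

// Count returns how many distinct members signed a view change for viewID.
func (t *vcTracker) Count(viewID uint64) int {
	v, ok := t.views[viewID]
	if !ok {
		return 0
	}
	n := 0
	for _, s := range v.signed {
		if s {
			n++
		}
	}
	return n
}

func main() {
	members := []string{"v0", "v1", "v2", "v3"}
	tr := newTracker()
	tr.AddViewIDKeyIfNotExist(8, members)
	_ = tr.Process(8, "v1")
	tr.AddViewIDKeyIfNotExist(8, members) // repeated call: votes are kept
	_ = tr.Process(8, "v2")
	_ = tr.Process(8, "v2")                // duplicate message from v2
	fmt.Println(tr.Count(8))               // 2
	fmt.Println(tr.Process(8, "intruder")) // sender intruder is not in the committee
}
```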

```go
// received enough view change messages, change state to normal consensus
if consensus.Decider.IsQuorumAchievedByMask(consensus.vc.GetViewIDBitmap(recvMsg.ViewID)) && consensus.isViewChangingMode() {
	// no previous prepared message, go straight to normal mode
	// and start proposing new block
	if consensus.vc.IsM1PayloadEmpty() {
		if err := consensus.startNewView(recvMsg.ViewID, newLeaderPriKey, true); err != nil {
			consensus.getLogger().Error().Err(err).Msg("[onViewChange] startNewView failed")
			return
		}
		go consensus.ReadySignal(SyncProposal)
		return
	}

	payload := consensus.vc.GetM1Payload()
	if err := consensus.selfCommit(payload); err != nil {
		consensus.getLogger().Error().Err(err).Msg("[onViewChange] self commit failed")
		return
	}
	if err := consensus.startNewView(recvMsg.ViewID, newLeaderPriKey, false); err != nil {
		consensus.getLogger().Error().Err(err).Msg("[onViewChange] startNewView failed")
		return
	}
}
```

If a quorum has been reached after processing the current message, the leader can transition from ViewChanging back to Normal mode. If there is no previously prepared block (the M1 payload is empty), it starts the new view right away, resets its consensus state, and signals that it is ready to propose a new block. Otherwise, it first commits the prepared block carried in the M1 payload and then starts the new view. In both cases, the leader notifies the validators that the view change is complete by broadcasting a NewView message. The transition and the broadcast are handled by the startNewView method, shown below.
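Before turning to startNewView, the quorum test and the branch on the M1 payload can be modeled in a few lines. The count-based threshold used here is an assumption for illustration: strictly more than two-thirds of the committee, which is 2f+1 signers when n = 3f+1. Harmony’s Decider may weigh votes differently. The quorumReached and onQuorum names are hypothetical, and onQuorum only returns the names of the steps it would take.

```go
package main

import "fmt"

// quorumReached is a hypothetical count-based threshold: strictly more than
// two-thirds of the committee has signed (2f+1 signers when n = 3f+1).
func quorumReached(signed, total int) bool {
	return 3*signed > 2*total
}

// onQuorum mirrors the branch in onViewChange: with no prepared block
// (empty M1 payload) the new view starts at once with a state reset;
// otherwise the prepared block is committed first and state is kept.
func onQuorum(m1Payload []byte) []string {
	if len(m1Payload) == 0 {
		return []string{"startNewView(reset=true)", "ReadySignal(SyncProposal)"}
	}
	return []string{"selfCommit(M1 payload)", "startNewView(reset=false)"}
}

func main() {
	for signed := 2; signed <= 4; signed++ {
		fmt.Printf("%d of 4 signed -> quorum=%v\n", signed, quorumReached(signed, 4))
	}
	fmt.Println(onQuorum(nil))
	fmt.Println(onQuorum([]byte{0x01}))
}
```

Committing the prepared block before starting the new view means a block that may already have gathered prepare votes is not discarded by the leader change.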

```go
if !consensus.isViewChangingMode() {
	return errors.New("not in view changing mode anymore")
}

msgToSend := consensus.constructNewViewMessage(
	viewID, newLeaderPriKey,
)
if msgToSend == nil {
	return errors.New("failed to construct NewView message")
}

if err := consensus.msgSender.SendWithRetry(
	consensus.getBlockNum(),
	msg_pb.MessageType_NEWVIEW,
	[]nodeconfig.GroupID{
		nodeconfig.NewGroupIDByShardID(nodeconfig.ShardID(consensus.ShardID))},
	p2p.ConstructMessage(msgToSend),
); err != nil {
	return errors.New("failed to send out the NewView message")
}
consensus.getLogger().Info().
	Str("myKey", newLeaderPriKey.Pub.Bytes.Hex()).
	Hex("M1Payload", consensus.vc.GetM1Payload()).
	Msg("[startNewView] Sent NewView Messge")

consensus.msgSender.StopRetry(msg_pb.MessageType_VIEWCHANGE)
```

First, the mode is checked to ensure that the node is still in ViewChanging mode. Then the leader constructs the NewView message and broadcasts it to the shard with retries. Retrying resends the message in case earlier attempts fail, which makes delivery more robust. Once the NewView message has been handed to the sender, the node stops retrying its own VIEWCHANGE messages, which are no longer needed.
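A tick-driven model illustrates how SendWithRetry and StopRetry interact, with each tick standing for one retry round. The retrySender type, its Tick method, and the string message types are hypothetical. The real sender’s retry interval and limits are not described in this article.

```go
package main

import (
	"fmt"
	"sort"
)

// retrySender is a hypothetical model of send-with-retry: every message handed
// to SendWithRetry is re-broadcast on each Tick until StopRetry is called for
// its message type.
type retrySender struct {
	pending map[string][]string // message type -> payloads still being retried
	sent    []string            // every broadcast, in order
}

func newRetrySender() *retrySender { return &retrySender{pending: map[string][]string{}} }

// SendWithRetry broadcasts once immediately and keeps the message for retries.
func (s *retrySender) SendWithRetry(msgType, payload string) {
	s.sent = append(s.sent, msgType+":"+payload)
	s.pending[msgType] = append(s.pending[msgType], payload)
}

// StopRetry drops all pending retries of the given message type.
func (s *retrySender) StopRetry(msgType string) { delete(s.pending, msgType) }

// Tick re-broadcasts every pending message; types are sorted for determinism.
func (s *retrySender) Tick() {
	types := make([]string, 0, len(s.pending))
	for t := range s.pending {
		types = append(types, t)
	}
	sort.Strings(types)
	for _, t := range types {
		for _, p := range s.pending[t] {
			s.sent = append(s.sent, t+":"+p)
		}
	}
}

func main() {
	s := newRetrySender()
	s.SendWithRetry("VIEWCHANGE", "view=8")
	s.Tick() // VIEWCHANGE is retried while the view change is in progress
	s.SendWithRetry("NEWVIEW", "view=8")
	s.StopRetry("VIEWCHANGE") // as in startNewView
	s.Tick()                  // only NEWVIEW is retried now
	fmt.Println(s.sent)       // [VIEWCHANGE:view=8 VIEWCHANGE:view=8 NEWVIEW:view=8 NEWVIEW:view=8]
}
```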

```go
consensus.current.SetMode(Normal)
consensus.consensusTimeout[timeoutViewChange].Stop()
consensus.setViewIDs(viewID)
consensus.resetViewChangeState()
consensus.consensusTimeout[timeoutConsensus].Start()

consensus.getLogger().Info().
	Uint64("viewID", viewID).
	Str("myKey", newLeaderPriKey.Pub.Bytes.Hex()).
	Msg("[startNewView] viewChange stopped. I am the New Leader")

if reset {
	consensus.resetState()
}
consensus.setLeaderPubKey(newLeaderPriKey.Pub)
```

The mode is switched back to Normal and the view change timer is stopped. The view ID is updated, and the view change state is reset because the view change has completed. The consensus timer is started, and the consensus state is reset if requested (reset is true when there was no prepared block). Finally, the node’s public key is set as the leader key for the current view. With this, the view change is complete and normal mode resumes, with the new leader proposing blocks.
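The state changes made by startNewView can be condensed into a hypothetical model that uses plain fields in place of real timers and keys. The node type and its fields are assumptions. Sending the NewView message and resetting the view change state are left out.

```go
package main

import (
	"errors"
	"fmt"
)

type Mode int

const (
	Normal Mode = iota
	ViewChanging
)

// node is a hypothetical, minimal model of the state touched by startNewView.
type node struct {
	mode                   Mode
	viewID                 uint64
	leader                 string
	consensusTimerRunning  bool
	viewChangeTimerRunning bool
	resetCount             int // how many times consensus state was reset
}

// startNewView refuses to run outside ViewChanging, then switches to Normal,
// swaps timers, records the view, optionally resets state, and installs the
// new leader key. Sending NewView is omitted in this sketch.
func (n *node) startNewView(viewID uint64, myKey string, reset bool) error {
	if n.mode != ViewChanging {
		return errors.New("not in view changing mode anymore")
	}
	n.mode = Normal
	n.viewChangeTimerRunning = false
	n.viewID = viewID
	n.consensusTimerRunning = true
	if reset {
		n.resetCount++
	}
	n.leader = myKey
	return nil
}

func main() {
	n := &node{mode: ViewChanging, viewID: 7, viewChangeTimerRunning: true}
	fmt.Println(n.startNewView(8, "new-leader", true)) // <nil>
	fmt.Println(n.mode == Normal, n.viewID, n.leader)  // true 8 new-leader
	fmt.Println(n.startNewView(9, "new-leader", true)) // not in view changing mode anymore
}
```

The mode check at the top makes the transition idempotent. A late duplicate trigger cannot run the transition twice.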

Proof of Test

View change has been part of the Harmony protocol since the very beginning. In the following section, we will initiate a view change within our Testnet to observe the real-time logic in action. Let’s dive into this process.

First, we will have to find out who the leader is. This can be done using the following command:

```shell
curl -d '{
  "jsonrpc": "2.0",
  "method": "hmy_getLeader",
  "params": [],
  "id": 1
}' -H 'Content-Type:application/json' -X POST 'https://api.s0.b.hmny.io'
```

The output looks as follows:

```json
{
  "id": 1,
  "jsonrpc": "2.0",
  "result": "one1yc06ghr2p8xnl2380kpfayweguuhxdtupkhqzw"
}
```

The current leader’s address is one1yc06ghr2p8xnl2380kpfayweguuhxdtupkhqzw. We will need to connect to this instance to proceed with the view change test.
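The same query can be issued from Go using only the standard library. This is a minimal sketch. The names rpcRequest, rpcResponse, getLeader, and parseLeader are hypothetical, and the request body mirrors the curl command above. parseLeader is kept separate from the network call so that it can be checked against a saved response.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

// rpcRequest and rpcResponse follow the JSON-RPC 2.0 shapes used above.
type rpcRequest struct {
	JSONRPC string        `json:"jsonrpc"`
	Method  string        `json:"method"`
	Params  []interface{} `json:"params"`
	ID      int           `json:"id"`
}

type rpcResponse struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      int             `json:"id"`
	Result  json.RawMessage `json:"result"`
	Error   *struct {
		Code    int    `json:"code"`
		Message string `json:"message"`
	} `json:"error"`
}

// parseLeader extracts the leader address from an hmy_getLeader response body.
func parseLeader(body []byte) (string, error) {
	var resp rpcResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return "", err
	}
	if resp.Error != nil {
		return "", fmt.Errorf("rpc error %d: %s", resp.Error.Code, resp.Error.Message)
	}
	var leader string
	if err := json.Unmarshal(resp.Result, &leader); err != nil {
		return "", err
	}
	return leader, nil
}

// getLeader sends the same request as the curl command to a shard endpoint.
func getLeader(endpoint string) (string, error) {
	body, err := json.Marshal(rpcRequest{JSONRPC: "2.0", Method: "hmy_getLeader", Params: []interface{}{}, ID: 1})
	if err != nil {
		return "", err
	}
	resp, err := http.Post(endpoint, "application/json", bytes.NewReader(body))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	var buf bytes.Buffer
	if _, err := buf.ReadFrom(resp.Body); err != nil {
		return "", err
	}
	return parseLeader(buf.Bytes())
}

func main() {
	leader, err := getLeader("https://api.s0.b.hmny.io")
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Println("current leader:", leader)
}
```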

Currently, leader rotation is hard-coded to cycle among the internal validators’ keys, and the leader is chosen only from those candidates (the list of leader candidates can be found in the protocol repository).

The intention was to establish the network’s foundation through internal validators first. Once the network reached a sufficient level of stability and resilience, the next step was to externalize it without relying entirely on internal validators. The objective has not changed: to transition gradually toward complete decentralization, in which the network is operated and governed solely by community validators. As leader rotation is externalized, view change will play a pivotal role.

Coming back to the test scenario, we will now ssh into the instance hosting the leader and verify that the validator is indeed the leader.

```shell
$ ./hmy utility metadata | jq -r '.result | "is-leader: \(.["is-leader"])"'
is-leader: true
```

After confirming this, we will connect to the next leader candidate. In the list of Testnet accounts below, the entry after the current leader is one1wh4p0kuc7unxez2z8f82zfnhsg4ty6dupqyjt2. However, consecutive entries alternate between shards. Harmony’s Testnet has 2 shards, so one1wh4p0kuc7unxez2z8f82zfnhsg4ty6dupqyjt2 belongs to Shard 1. The entry after it, one1puj38zamhlu89enzcdjw6rlhlqtyp2c675hjg5 with the BLS key 99d0835797ca0683fb7b1d14a882879652ddcdcfe0d52385ffddf8012ee804d92e5c05a56c9d7fc663678e36a158a28c, is the actual next leader candidate for Shard 0.
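The alternating pattern can be expressed as a small, hypothetical helper that deals accounts out to shards in turn, so that the account at position p belongs to shard p mod numShards. This reading is inferred from the description here and from the first six addresses in TNHarmonyAccountsV1. The shardMembers and nextCandidate functions are illustrative and are not taken from the Harmony repository.

```go
package main

import "fmt"

// shardMembers returns the accounts assigned to shard, assuming accounts are
// dealt out in turn: position p belongs to shard p % numShards.
func shardMembers(accounts []string, shard, numShards int) []string {
	var out []string
	for p := shard; p < len(accounts); p += numShards {
		out = append(out, accounts[p])
	}
	return out
}

// nextCandidate returns the member that follows current within its shard,
// wrapping around at the end. It returns "" if current is not in that shard.
func nextCandidate(accounts []string, current string, shard, numShards int) string {
	members := shardMembers(accounts, shard, numShards)
	for i, m := range members {
		if m == current {
			return members[(i+1)%len(members)]
		}
	}
	return ""
}

func main() {
	// First six Testnet accounts from TNHarmonyAccountsV1, in list order.
	accounts := []string{
		"one1yc06ghr2p8xnl2380kpfayweguuhxdtupkhqzw",
		"one1wh4p0kuc7unxez2z8f82zfnhsg4ty6dupqyjt2",
		"one1puj38zamhlu89enzcdjw6rlhlqtyp2c675hjg5",
		"one1x6meu5tqzuz0dyseju80zd2c2ftumnm0l06h4t",
		"one1dpm37ppsgvepjfyvsamas25md280ctgmcfjlfx",
		"one1mrprl7pxuqpzp0a84d5kd2zenpt578rr4d68ru",
	}
	fmt.Println(shardMembers(accounts, 0, 2))
	fmt.Println(nextCandidate(accounts, accounts[0], 0, 2)) // one1puj38zamhlu89enzcdjw6rlhlqtyp2c675hjg5
}
```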

```go
var TNHarmonyAccountsV1 = []DeployAccount{
	{Index: "0", Address: "one1yc06ghr2p8xnl2380kpfayweguuhxdtupkhqzw", BLSPublicKey: "e7f54994bc5c02edeeb178ce2d34db276a893bab5c59ac3d7eb9f077c893f9e31171de6236ba0e21be415d8631e45b91"},
	{Index: "1", Address: "one1wh4p0kuc7unxez2z8f82zfnhsg4ty6dupqyjt2", BLSPublicKey: "4bf54264c1bfa68ca201f756e882f49e1e8aaa5ddf42deaf4690bc3977497e245af40f3ad4003d7a6121614f13033b0b"},
	{Index: "4", Address: "one1puj38zamhlu89enzcdjw6rlhlqtyp2c675hjg5", BLSPublicKey: "99d0835797ca0683fb7b1d14a882879652ddcdcfe0d52385ffddf8012ee804d92e5c05a56c9d7fc663678e36a158a28c"},
	{Index: "5", Address: "one1x6meu5tqzuz0dyseju80zd2c2ftumnm0l06h4t", BLSPublicKey: "f441b75470919983ba18a0525b1c101af42cae052c6d50f74d1553eebbe78ef226849c5e5a7fb2ba563eec6b20380c00"},
	{Index: "8", Address: "one1dpm37ppsgvepjfyvsamas25md280ctgmcfjlfx", BLSPublicKey: "8a211eb5e9334341fd2498fb5d6b922b4a0984d6a4ea0b5631c1904de5fe21fd6889c9c032d862546ca50a5c41294b0c"},
	{Index: "9", Address: "one1mrprl7pxuqpzp0a84d5kd2zenpt578rr4d68ru", BLSPublicKey: "2e9aa982036860eccb0880702c5d71665761f8d4e6ab5f3d8c3aee25b3e68a2c7eaa3cd85972c7f9a3c19d3fed3d5d01"},
	// ...
}
```

We will now ssh into that instance and verify that it does indeed host the desired key.

```shell
$ ./hmy utility metadata | jq -r '.result.blskey | "blskey: \(.[0])"'
blskey: 99d0835797ca0683fb7b1d14a882879652ddcdcfe0d52385ffddf8012ee804d92e5c05a56c9d7fc663678e36a158a28c
```

After connecting to the candidate instance, we restart the Harmony binary on the leader instance to trigger a view change. While the binary stops and starts again, the leader does not process incoming messages, so consensus halts. Once their timeout elapses, validators broadcast view change messages to the shard, and the candidate, as the next leader, collects them. If a quorum is reached within the view change timeout, the candidate becomes the new leader. Let’s observe whether this is indeed what happens.

Once the Harmony binary on the leader has been restarted, the view change kicks in. Let’s look at the real-time log of the new leader (previously the leader candidate). The logs contain an abundance of information, so for simplicity we will look only at the entries relevant to the process.

```json
{"phase":"Prepare","mode":"Normal","message":"[ConsensusMainLoop] Ops Consensus Timeout!!!"}
{"phase":"Prepare","mode":"ViewChanging","message":"[getNextViewID]"}
{"phase":"Prepare","mode":"ViewChanging","message":"[getNextLeaderKey] got leaderPubKey from coinbase"}
{"phase":"Prepare","mode":"ViewChanging","message":"[getNextLeaderKey] next Leader"}
{"phase":"Prepare","mode":"ViewChanging","message":"[startViewChange]"}
{"phase":"Prepare","mode":"ViewChanging","message":"[constructViewChangeMessage]"}
```

Once the consensus timer expires, the node switches to ViewChanging mode. The next view ID and the next leader’s public key are determined, and with this information the view change message is constructed and broadcast. Every validator goes through this process, so the leader candidate participates as well.

```json
{"phase":"Prepare","mode":"ViewChanging","message":"[startNewView] Sent NewView Messge"}
{"phase":"Prepare","mode":"Normal","message":"[ResetViewChangeState] Resetting view change state"}
{"phase":"Prepare","mode":"Normal","message":"[startNewView] viewChange stopped. I am the New Leader"}
{"blockNum":12051992,"message":"PROPOSING NEW BLOCK ------------------------------------------------"}
```

These logs are specific to the new leader. Every validator receives the view change messages, but the sanity checks let only the designated next leader act on them. Once the leader has received enough view change messages to form a quorum, it constructs the NewView message and broadcasts it to the validators. Its consensus then returns to Normal mode, and it logs a confirmation that it is now the leader. At this point, the view change is complete and the new leader continues consensus by proposing new blocks.

```json
{"phase":"Prepare","mode":"ViewChanging","message":"[onNewView] Received NewView Message"}
{"phase":"Prepare","mode":"ViewChanging","message":"[ResetViewChangeState] Resetting view change state"}
{"phase":"Announce","mode":"Normal","message":"onNewView === announce"}
{"phase":"Announce","mode":"Normal","message":"new leader changed"}
```

These logs can be observed on all other, non-leader validators. After the new leader sends out the NewView message, each validator receives it in onNewView. Once the message has been verified, the validator resets its view change state and its consensus returns to Normal mode in the Announce phase. Finally, the validator logs that the leader has changed, and consensus resumes. With this, the view change is complete on both the leader’s and the validators’ side.
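The validator side can be sketched in the same spirit. The checks below (current mode, matching view ID, expected sender) are an illustrative subset chosen for this sketch and do not describe Harmony’s actual onNewView verification. The validator and newViewMsg types are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
)

// newViewMsg is a simplified NewView message.
type newViewMsg struct {
	ViewID uint64
	Sender string
}

// validator is a hypothetical model of a non-leader node in ViewChanging mode.
type validator struct {
	mode           string
	phase          string
	viewChangingID uint64 // view ID this node is trying to move to
	expectedLeader string // next leader computed when the view change started
	leader         string
}

// onNewView accepts the message only from the expected leader for the view
// being changed to, then returns to Normal mode in the Announce phase.
func (v *validator) onNewView(m newViewMsg) error {
	if v.mode != "ViewChanging" {
		return errors.New("not in view changing mode")
	}
	if m.ViewID != v.viewChangingID {
		return fmt.Errorf("view ID mismatch: got %d, want %d", m.ViewID, v.viewChangingID)
	}
	if m.Sender != v.expectedLeader {
		return errors.New("NewView not sent by the expected leader")
	}
	v.mode, v.phase, v.leader = "Normal", "Announce", m.Sender
	return nil
}

func main() {
	v := &validator{mode: "ViewChanging", phase: "Prepare", viewChangingID: 8, expectedLeader: "L2"}
	fmt.Println(v.onNewView(newViewMsg{ViewID: 8, Sender: "L3"})) // NewView not sent by the expected leader
	fmt.Println(v.onNewView(newViewMsg{ViewID: 8, Sender: "L2"})) // <nil>
	fmt.Println(v.mode, v.phase, v.leader)                        // Normal Announce L2
}
```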

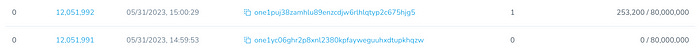

The leader change can be examined in the Testnet Explorer as well. Looking at the block history, we can observe that the view change has been processed successfully! The leader has changed from one1yc06ghr2p8xnl2380kpfayweguuhxdtupkhqzw to one1puj38zamhlu89enzcdjw6rlhlqtyp2c675hjg5 at block 12051992.

For more data regarding view change, check out our newly added metrics page in our explorer!

Conclusion

The view change mechanism is an essential component of Harmony’s consensus protocol. With it, the protocol keeps consensus from stalling and ensures the liveness of the network by allowing new blocks to be produced and committed continuously. View change will play a pivotal role in advancing the network further toward complete decentralization.